SHAP

SHAP (Shapley Additive Explanations) is a game-theoretic approach to explaining the output of any machine learning model. It connects optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions (see the papers for details and citations). (Source: "Welcome to the SHAP documentation", SHAP latest documentation.)
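To make "credit allocation with Shapley values" concrete, here is a minimal sketch that computes exact Shapley values by enumerating every coalition of features. It assumes that a feature "absent" from a coalition takes its value from a single fixed `baseline` vector. That is one of several possible definitions of a coalition's value and is an assumption here, not necessarily what SHAP uses. The function name `shapley_values` and the toy `model` are hypothetical. Enumeration is exponential in the number of features, so it only suits a handful of them. The `shap` library itself relies on faster model-specific or sampling-based explainers.

```python
from __future__ import annotations

from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    f: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values of f at x (hypothetical helper, brute-force enumeration).

    Assumption: features outside a coalition are replaced by their baseline value.
    """
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        # Features in the coalition keep their value from x; all others use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for a coalition S without feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when it joins coalition S.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Toy model (hypothetical): two additive terms plus an interaction between features 0 and 2.
def model(z: Sequence[float]) -> float:
    return 2 * z[0] + 3 * z[1] + z[0] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print([round(p, 6) for p in phi])  # [3.5, 6.0, 1.5]
print(round(sum(phi), 6), model(x) - model(baseline))  # 11.0 11.0
```

The additive terms are credited entirely to their own features, while the interaction term (worth 3 at `x`) is split evenly between features 0 and 2. The attributions sum to `f(x) - f(baseline)`, which is the "additive" part of the name.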

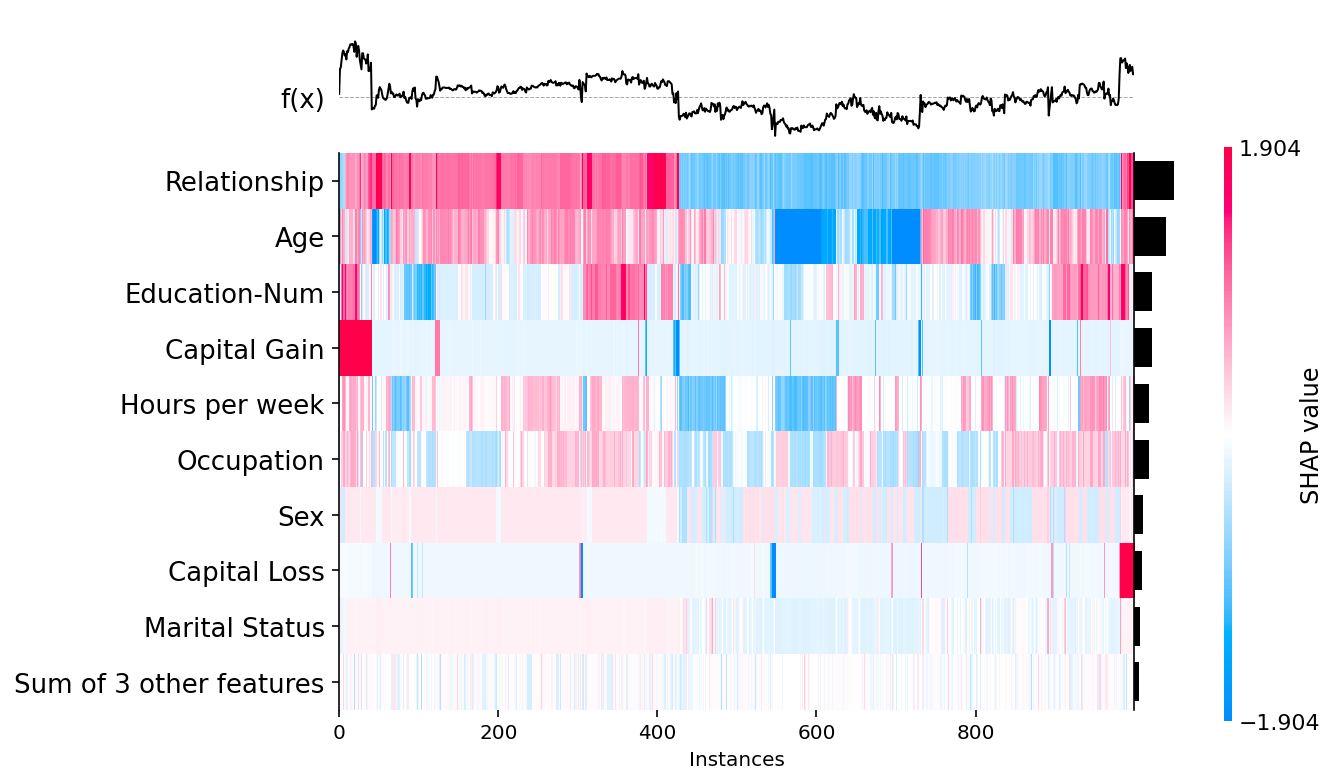

```python
import shap
shap.plots.heatmap(shap_values[:1000])  # shap_values: a shap.Explanation, e.g. returned by explainer(X)
```